from sklearn.preprocessing import OrdinalEncoder

import pandas as pd

from pureml.decorators import dataset, transformer, load_data

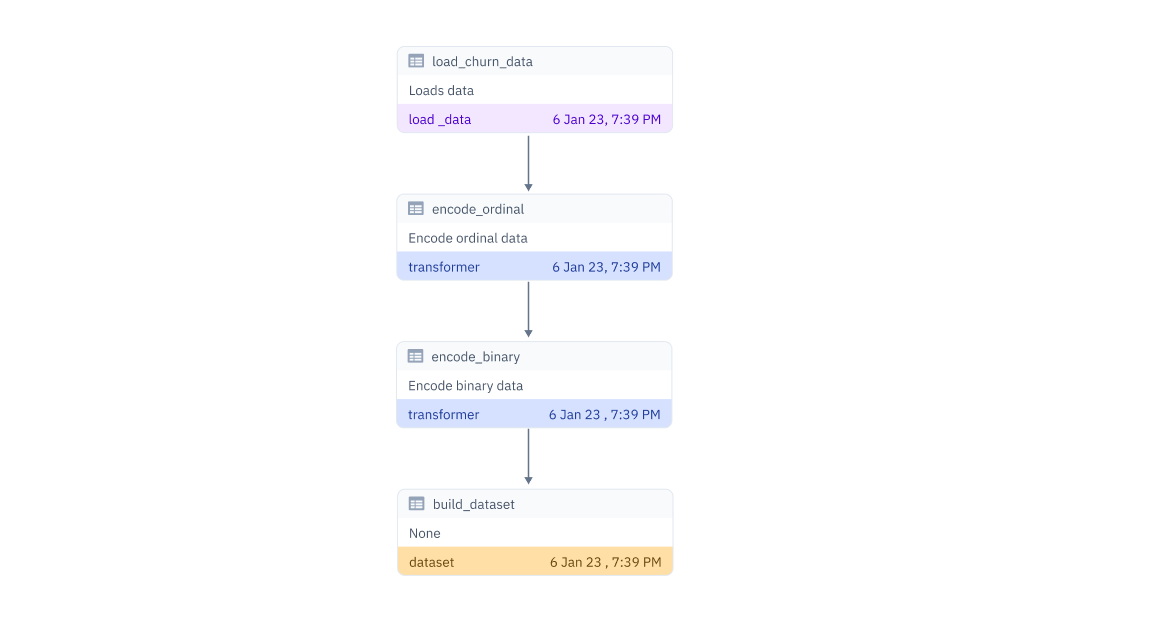

@load_data()
def load_churn_data():
    '''
    Load the raw telecom churn CSV into a DataFrame.
    '''
    df = pd.read_csv('./bigml_59c28831336c6604c800002a.csv')
    return df
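
# Sketch, assuming the 'churn' column of the BigML CSV stores the literal text
# "True"/"False": pandas.read_csv parses such a column as dtype bool, which is
# why encode_binary maps {True: 1, False: 0} rather than string keys. The
# inline CSV below is a hypothetical two-row sample, not taken from the real file.
import io

import pandas as pd

_sample_csv = io.StringIO(
    "state,phone number,international plan,voice mail plan,churn\n"
    "KS,382-4657,no,yes,False\n"
    "OH,371-7191,yes,no,True\n"
)
_sample_df = pd.read_csv(_sample_csv)
# _sample_df['churn'] has dtype bool; the yes/no plan columns stay as strings
# because 'yes'/'no' are not among read_csv's default true/false values.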

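@transformer()
def encode_ordinal(df):
    '''
    Ordinal-encode the categorical 'state' and 'phone number' columns
    as numeric codes.
    '''
    col_ord = ['state', 'phone number']
    df_ord = df[col_ord]
    # OrdinalEncoder replaces each value with its index in the sorted list of
    # categories seen in that column; the result is a float64 array.
    feat = OrdinalEncoder().fit_transform(df_ord)
    df[col_ord] = feat
    return df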
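
# Sketch of what OrdinalEncoder does inside encode_ordinal, on hypothetical toy
# values: each column's distinct values are collected and sorted into
# categories_, every value is replaced by its index in that list, and the
# output is a float64 array.
import pandas as pd
from sklearn.preprocessing import OrdinalEncoder

_toy_states = pd.DataFrame({"state": ["OH", "KS", "OH", "NJ"]})
_enc = OrdinalEncoder()
_codes = _enc.fit_transform(_toy_states)
# _enc.categories_[0] -> ['KS', 'NJ', 'OH'];  _codes.ravel() -> [2., 0., 2., 1.]

# Because encode_ordinal refits the encoder on whatever data it receives, the
# code a given state gets depends on which other states are present. If a
# fitted encoder were instead reused on new data, handle_unknown (available in
# scikit-learn >= 0.24) maps unseen values to a sentinel instead of raising:
_enc_unknown = OrdinalEncoder(handle_unknown="use_encoded_value", unknown_value=-1)
_enc_unknown.fit(_toy_states)
_unseen = _enc_unknown.transform(pd.DataFrame({"state": ["TX", "KS"]}))
# _unseen.ravel() -> [-1., 0.]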

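@transformer()
def encode_binary(df):
    '''
    Encode the yes/no plan columns and the boolean churn label as 1/0.
    '''
    # Values that are not keys of the mapping dict become NaN.
    df['voice mail plan'] = df['voice mail plan'].map({'yes': 1, 'no': 0})
    df['international plan'] = df['international plan'].map({'yes': 1, 'no': 0})
    # Assumes read_csv parsed the 'churn' column as bool (from 'True'/'False' text).
    df['churn'] = df['churn'].map({True: 1, False: 0})
    return df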
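
# Sketch: Series.map returns NaN for any value that is not a key in the dict,
# so a stray 'Yes' or ' no' in the raw data would silently become NaN in
# encode_binary. _map_yes_no_strict is a hypothetical stricter helper (not part
# of pureml or pandas) that fails loudly instead.
import pandas as pd


def _map_yes_no_strict(s):
    '''Map 'yes'/'no' to 1/0, raising ValueError on any other value (including NaN).'''
    mapped = s.map({'yes': 1, 'no': 0})
    # Any position that did not map is an unexpected raw value.
    unexpected = s[mapped.isna()].unique()
    if len(unexpected) > 0:
        raise ValueError(f"unexpected values in {s.name!r}: {list(unexpected)}")
    return mapped.astype(int)


_loose = pd.Series(['yes', 'no', 'Yes'], name='voice mail plan').map({'yes': 1, 'no': 0})
# _loose -> [1.0, 0.0, NaN]; _map_yes_no_strict on the same Series raises ValueError.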

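@dataset('telecom_churn')
def build_dataset():
    '''
    Build the 'telecom_churn' dataset: load the raw CSV, then apply the
    ordinal and binary encoders in turn.
    '''
    df = load_churn_data()
    df = encode_ordinal(df)
    df = encode_binary(df)
    return df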

df = build_dataset()